I had some major lower back and leg issues in 2015 that lasted at least a year. After doing some physical therapy, I was much better off, enough so that I could play Ultimate competitively again.

On Father’s Day weekend 2020, I tweaked my back again. This was a true Father’s Day weekend: my wife was working, so I watched the kids the whole weekend. I was fairly deconditioned because I had been skipping the gym to avoid catching the then-novel Covid-19. Biking had been sketchy for my back ever since the initial injury, but that weekend I took the kids around town in a bike trailer. The weight of the kids and their stuff, combined with how little I was exercising at the time, was too much for my back to handle. I felt it tighten up, and then kept pushing. By the time we made it home, I knew I was in trouble.

Stress may play a role in pain (see On Pain and Hope), and 2020 was a stressful time, both for me personally (two young children, and helping run a startup that had just seen its sales pipeline dry up and a cofounder leave) and for the world (U.S. election, pandemic, etc.).

My back wasn’t quite as bad as the first time, but with the pain I had, most days I was hardly getting out of the house. By December of that year, after starting to see the physical therapist again, I was feeling marginally better, and I decided to get a step tracker to help me walk more consistently and avoid future injury.

Now that I’ve kept it up for several years and am feeling better than ever, I wanted to write up some thoughts and tips. If you’re looking to do something like this, I hope this article gets you off to a good start.

First watch

My first fitness watch was the Garmin Vivofit 4. Here’s my Amazon review of it:

I got this watch for its best-in-class battery life (1 year or more), since I disliked taking past watches off to charge them. This one could stay on my wrist for basically a year.

Surprisingly, the best feature for me was the watch’s step goal streak counter. Streaks greatly motivate me. Every day I hit the step goal, it would cheerily chirp about it and show the streak counter advancing by one. Every five days in a row would result in a special animation. Small things, but they kept me going.

At first the watch set an automatically calculated step goal. This was motivating since I started at a low baseline. However, it was calibrated to increase when I hit the goal and decrease when I missed it. This ended up being hard to reason about, and every day I hit the goal meant needing more steps the next day, which was not very motivating. (“My reward for hitting my goal today is to make tomorrow even harder?”) So I looked at my recent step counts and set an achievable goal of (I think) 5,000 steps.

For the last few years, my step goal has been 6,000 steps. I have had a few streaks of 250 consecutive days, and I likely hit the step goal about 360 days a year, for a minimum of 2 million steps per year.
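As a rough check on that lower bound, counting only the 6,000-step minimum on the roughly 360 days the goal is hit (and ignoring any steps on missed days):

$$
6{,}000\ \frac{\text{steps}}{\text{day}} \times 360\ \text{days} = 2{,}160{,}000\ \text{steps} \approx 2\ \text{million steps per year}
$$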

Set a floor, not a ceiling

I thought about increasing the step goal further, but decided to focus on hitting it consistently instead. I treated the step goal as a step minimum, not a stretch goal. Or, to put it another way, I set a floor, not a ceiling.

If you’re interested in doing something like this, my key piece of advice is to start and stay lower than you think you need to.

Going for a high daily goal commits you to a lot each day, which makes it much harder to stick with the habit. Sure, I could hit the vaunted 10,000 steps on occasion, but the effort (and time!) needed to achieve this day after day is very high. Time constraints were one of the lessons I learned while doing the fitness challenge.

When people pick exercise goals, they often imagine working out under ideal conditions. I would recommend imagining how many steps you’ll want to get when:

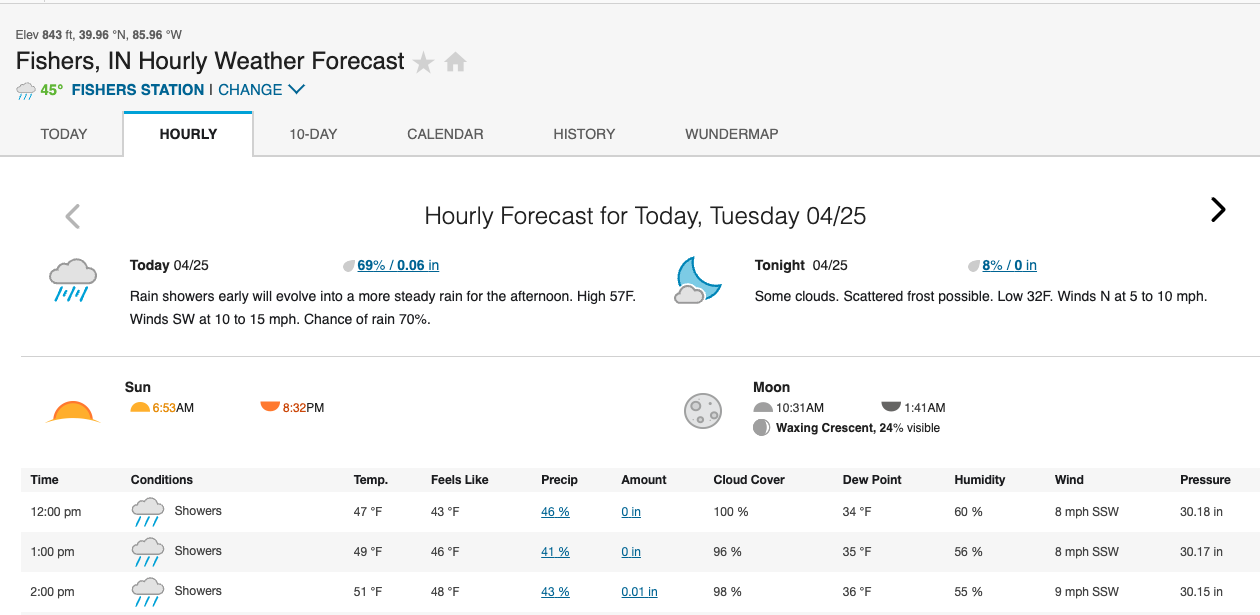

- it’s going to rain all day

- it’s 100°F outside with 90% humidity

- there are a couple of inches of snow on the ground and your phone keeps turning off because it’s so cold

- you have a slight injury or pain

- work or family obligations are higher than normal

- you’re traveling

Also, remember that this is the step minimum. You may be doing other exercise, like swimming, cycling, or strength work, that won’t show up in your step count.

Setting a step floor is probably most helpful for folks who work from home or have desk jobs. I feel that it keeps me honest: “Hmm, yeah, I only got like 2,000 steps today, need to move around a little!”

Typically I pick up a few thousand steps just by walking around the house, running errands, etc. After tracking steps for a while, I found that I walk about 100 steps a minute. I know a few routes around my house that take 5, 10, 15, 20, 30, 45, and 60 minutes, so it’s easy to pick one that gets me close to the goal based on how many more steps I need.
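The route-picking arithmetic can be sketched in a few lines of Python. This is a minimal illustration, not a tool I actually use. It assumes the roughly 100 steps per minute pace and the route lengths mentioned above, and the function names are hypothetical. It picks the shortest route that covers the remaining steps.

```python
import math
from typing import Optional, Sequence

# Assumption: a pace of about 100 steps per minute, as estimated above.
STEPS_PER_MINUTE = 100

# Route lengths in minutes, from the text; the routes themselves are hypothetical.
ROUTE_MINUTES = [5, 10, 15, 20, 30, 45, 60]


def minutes_needed(goal: int, steps_so_far: int, pace: int = STEPS_PER_MINUTE) -> int:
    """Minutes of walking needed to reach the goal at the given pace (0 if already met)."""
    remaining = max(goal - steps_so_far, 0)
    return math.ceil(remaining / pace)


def pick_route(goal: int, steps_so_far: int,
               routes: Sequence[int] = ROUTE_MINUTES) -> Optional[int]:
    """Return the shortest route (in minutes) that covers the remaining steps.

    Returns 0 if the goal is already met, or None if no single route is long enough.
    """
    needed = minutes_needed(goal, steps_so_far)
    if needed == 0:
        return 0
    for route in sorted(routes):
        if route >= needed:
            return route
    return None


if __name__ == "__main__":
    # A 6,000-step goal with 3,700 steps logged so far.
    print(minutes_needed(6000, 3700))
    print(pick_route(6000, 3700))
```

For example, with a 6,000-step goal and 3,700 steps so far, 2,300 steps remain. At 100 steps a minute that is 23 minutes, so the 30-minute route covers it.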

Setting yourself up to succeed

To maintain a long streak, you’ve got to minimize your chances to fail. Identify the most common reasons for failure and try to mitigate.

Forgetfulness is probably my biggest enemy. One thing leads to another and it just sort of slips away. Setting a few alarms or reminders at night and snoozing them until I hit the goal has been the most helpful for me. After a while, it becomes part of a nightly mental check.

Since I can’t log steps if the watch is out of battery, I monitor the watch battery and bring the charger on trips or charge it before going if it’s getting close to empty.

Given how much I walk, I try to buy good shoes and replace them every few months. If you get random aches (knees, back, etc.), it might be a good time to switch.

After being cold a few times and reluctant to walk, I got some gear for the elements, like warm gloves and a balaclava, which I bought after reading this review:

I work at an airport in Indiana. As you can I imagine it is super cold in winter and the winds oh my. It is an airport so nothing blocking those winds. We all survive my dressing in layers and not just a few. On average we are wearing 5 layers minimum. This hat is the best combo the built in neck gator protects my nose and mouth. So that it does not hurt to breath. The hoodie in it keeps the wind out my face. Nothing worse then those super cold nights like we just had with the artic polar. I was working in -25 and the winds make you tear up the cold made those watery eyes freeze yes my eyelashes and all froze like ice cycles….

After a few years and a few Vivofit watches, I wanted something more durable. I ended up upgrading to the Garmin Instinct 2, and that has been a positive change: it adds heart rate tracking while still offering roughly month-long battery life on a single charge.

If I forget or miss the goal by a few steps, I try not to take the failure too seriously. I still got way more steps than I would have otherwise and I’m helping keep my body healthy, so I look at it as a chance to start a new (potentially even longer) streak.

I could see a treadmill being in my future since it would enable me to get steps in inclement weather more easily.

Conclusion

Having a daily step floor has worked pretty well for me. I’ve missed a few days here and there, but it has been a positive change, and I hope to continue it for the rest of my life.