Given the increasing effectiveness and autonomy of coding agents, I wanted a good way of working with them when I'm not at my computer. I found a setup that works pretty well for me, so I'm sharing it in case it's helpful for someone else.

I've been using Claude Code as my primary agentic coding tool for about 6 months now, so all examples here use it, although I'm guessing the same setup would work with other tools. Claude Code has been solid and continually improving, especially with Opus 4.5. (But I guess ask me in a few months… :D)

Tailscale

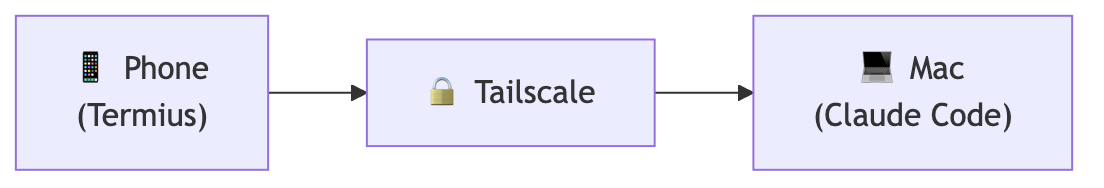

The key part of a setup like this is having some computer that has Claude Code set up, and then being able to securely connect to it.

My current overall approach is to set up a secure network through Tailscale so I can SSH into that computer from my other devices.

I set up my main computer (currently an M3 Max MacBook Pro) to run Tailscale. First, start the Tailscale daemon:

$ sudo tailscaled

(This changed my computer's hostname for some reason, which was not super desirable.)

Then configure your computer to accept SSH connections from devices in your tailnet (basically your authenticated devices):

$ tailscale set --ssh
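As a rough sketch, the steps so far can be wrapped in a small script so they are easy to repeat. This is only an illustration, not an official Tailscale recipe: the `run` helper, the `DRY_RUN` variable, the function names, and the `me` / `my-macbook` placeholders are all made up for this example. It also assumes the daemon is already running, the machine is already logged in to your tailnet, and the hostname resolves from the device you are connecting from.

```shell
#!/bin/sh
# Sketch only: helper names and default values here are hypothetical.

# Run a command, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# On the host: accept Tailscale SSH connections, then list tailnet devices.
# Assumes tailscaled is already running (for example via `sudo tailscaled`
# in another terminal) and this machine is logged in to the tailnet.
enable_tailscale_ssh() {
  run tailscale set --ssh
  run tailscale status
}

# On another device: SSH to the host over the tailnet.
# "me" and "my-macbook" are placeholders; pass your own user and hostname.
connect_to_host() {
  run ssh "${1:-me}@${2:-my-macbook}"
}
```

Sourcing the file and running `DRY_RUN=1 enable_tailscale_ssh` prints the two commands without executing them, which is a cheap way to check what the script would do before running it for real.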

It seems like Tailscale has two (three!) Mac interfaces:

- a native Mac app (used for connecting to the Tailscale network)

- the same, but installable through the App Store

- and one that's a CLI.

You need the CLI version to be able to run the tailscale ssh command, and the installed versions seem to need to match one another, or you get warnings and possibly other issues: